Hello!

I’m using a triangular mesh to seed a shape in a MPM simulation. Is there a way of moving the mesh together with the particles? (I mostly need the mesh for rendering, but also for computing stuff like its curvature)

Thank you in advance!

roberto

Hi! I’m not super sure how @yuanming made this cool image at that moment, but if you want to render 3D meshes, hope my extension library Taichi THREE, despite it’s still under-development, could helps ![]()

https://github.com/taichi-dev/taichi/blob/master/examples/mass_spring_3d.py

In my case, the starting point is a mesh. I used code from taichi_elements to voxelise the mesh and generate the MPM points. After that, my algorithm deforms the mesh, quite massively ![]() . At the end of the simulation, the points had move well far away from their original position. I would like to deform the mesh accordingly.

. At the end of the simulation, the points had move well far away from their original position. I would like to deform the mesh accordingly.

I was thinking to represent the position of each vertex in the original mesh as a linear combination of the k>=3 nearest MPM points. At the end of the simulation, I would compute the new position of the mesh vertices using the weights of the initial linear combination. Does this sound sensible to you? I’m afraid that computing k nearest neighbours with so many points and vertices may be super slow ![]() …

…

Yes, that’s indeed super slow, but you may use a neighbour lookup table or octree for quicker lookup, e.g., pseudo-code:

for i in pos: # scan neighbours

x, y = int(pos[i])

ti.append(neighbour, [x, y], i)

Hello! I found a way of deforming the mesh, and it works like a charm ![]() . I thought I would share it here in case it can help someone else.

. I thought I would share it here in case it can help someone else.

Here’s my setup: I have a mesh with vertices v and faces f, which I use to seed particles p. The particles get deformed, and turn into q. And what I want is to move the vertices v following the deformation of p into q.

There’s two steps: first, i compute k neighbouring points p for each mesh vertex v (using a kdtree). Second, I use moving least squares to compute a deformation using the k p neighbours of each vertex as control points.

Here’s the complete algorithm:

import numpy as np

from sklearn.neighbors import KDTree

# you need to get the following numpy arrays

# v: mesh vertices

# p: initial particle positions

# q: final particle positions

# The new vertices will be in V

k=5

tree = KDTree(p)

dist, ind = tree.query(v, k=k)

alpha = 1

w = np.array(1/dist**alpha)

w[w==np.inf]=2**31-1

V = v.copy()

for index in range(len(v)):

vi=v[index]

pi=p[ind[index]]

qi=q[ind[index]]

wi=w[index]

pcentroid = pi.T.dot(wi)/np.sum(wi)

qcentroid = qi.T.dot(wi)/np.sum(wi)

ptr = pi - pcentroid

qtr = qi - qcentroid

M = ptr.T.dot(np.diag(wi).dot(ptr))

detM = np.linalg.det(M)

if detM < 1e-8:

V[index] = vi - pcentroid + qcentroid

else:

invM = np.linalg.inv(M)

for j in range(5): # k = 5

res = (vi-pcentroid).dot(invM.dot(wi[j]*ptr[j]))

A[index,j] = res

V[index] = np.sum(np.diag(A[index,:]).dot(qi),axis=0) + qcentroid

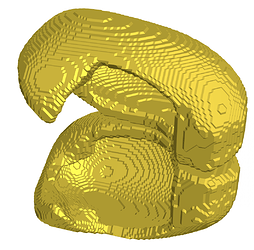

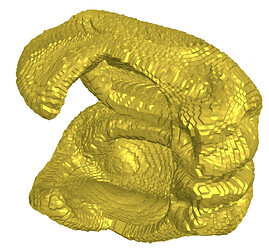

Finally, here’s pictures of one of my brain meshes before and after deformations.

I realised that most of the times detM<1e-8, and the code was simply doing an average of the k nearest neighbours.

Here’s an improved version: Instead of Moving Least Squares, I compute a polar transformation of the original k-nearest particles to their final positions, plus a scaling factor. I then apply that rotation and scaling to the vertex. Here’s the new code:

import numpy as np

from sklearn.neighbors import KDTree

from numpy.linalg import svd

# you need to get the following numpy arrays

# v: mesh vertices

# p: initial particle positions

# q: final particle positions

# The new vertices will be in V

def polar(P, Q):

'''

Input: moving and reference Nx3 arrays of coordinates

Returns 3x3 rotation matrix and linear scaling

'''

# scale

TP=P.T.dot(P)

TQ=Q.T.dot(Q)

s = (np.linalg.det(TQ)/np.linalg.det(TP))**(1/2*1/3)

# rotation

T = Q.T.dot(P)

U,_,V=svd(T)

R = V.T.dot(U.T)

return R, s

k=10

tree = KDTree(p)

dist, ind = tree.query(v, k=k)

alpha = 1

w = np.array(1/dist**alpha)

w[w==np.inf]=2**31-1

V = v.copy()

for index in range(len(v)):

vi=v[index]

pi=p[ind[index]]

qi=q[ind[index]]

wi=w[index]

pcentroid = pi.T.dot(wi)/np.sum(wi)

qcentroid = qi.T.dot(wi)/np.sum(wi)

ptr = pi - pcentroid

qtr = qi - qcentroid

vtr = vi - pcentroid

R, scale = polar(ptr, qtr)

V[index] = scale*vtr.dot(R) + qcentroid